2018 Data Futures Workshop

Presentation 1

Can you spare some change? - Daniel Norton, Assistant Registrar (Data Quality and Returns), Loughborough University

Presentation 2

Data Futures at the University of Leeds - Pam Macpherson Barrett, Head of Policy, Funding and Regulation, University of Leeds

Presentation 3

The do (almost) nothing approach - Mick Norman, Data Quality Manager, University of Kent

Presentation 4

Data Futures at Teesside University - Marian Hilditch, Head of Data Quality, Teesside University

Everything I hate about Data Futures

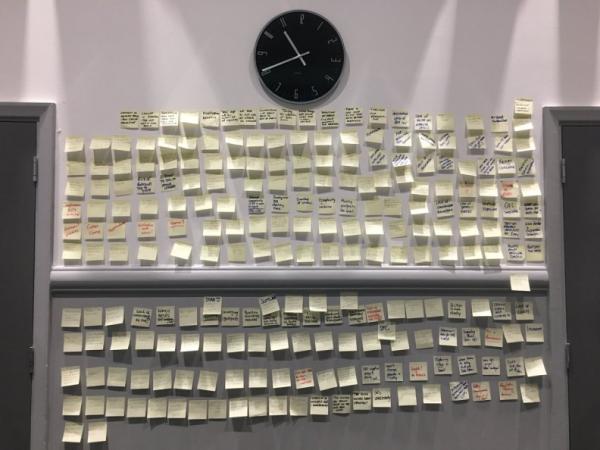

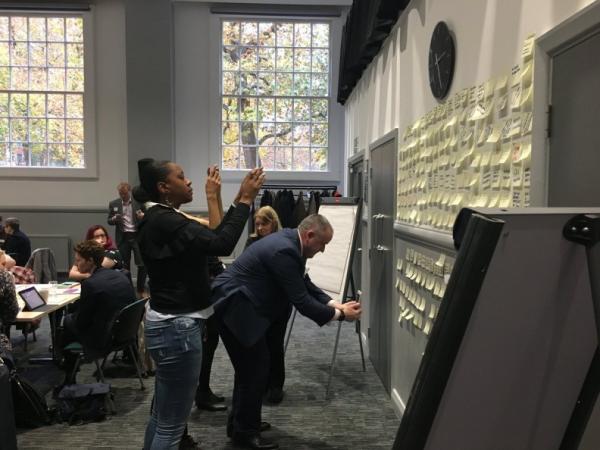

We asked delegates to tell us what they hate about Data Futures. The event was attended by 117 delegates in total, from 64 Higher Education Providers, 5 suppliers and HESA. We invited honesty: the rest of the day would look at potential solutions, but that first hour was all about getting things off our chests. Delegates produced 249 post-its, which they put up on The Wall.

Many spent time pondering and photographing The Wall. They nodded along, noticed similarities and identified points we have been aware of but may not have seen articulated before.

We’ve attempted to group these messages into 13 categories. The categories are listed alphabetically, but the comments within each category are in no particular order. Repeated comments reflect separate post-its.

Browse The Wall below and join the conversation on Twitter.

The burden

- It’s not reduced the burden at all.

- More of a burden, not less.

- The data burden has not decreased.

- Burden not being reduced.

- The data burden has not decreased.

- There will be a huge lag of time before institutions see the supposed benefits - and not for Registry.

- Why is HESES still happening?

- Too much to do without Data Futures!!

- Timescales and resource to do DF at same time as HESA 2018/19.

- Too many changes at the same time (eg HECoS).

- No consolidation of returns.

- We’re not moving from a perfect system = many muddles and workarounds at universities - this is another burden on top. So much change happening together.

- Overlap of returns.

- Apprenticeships.

- Still dealing with existing operational HESA activities on top of Data Futures

- Reduced burden is no longer being mentioned.

- Lots of things happening at once: REF, TEF, Fees Review, Data Futures

- Doing HESES as well as in-year HESA return.

- ITT timescales.

- Double reporting for Degree Apprenticeships through ILR and also through Data Futures - not reducing the burden.

- Not reducing the burden.

- Disappearing benefits: will replace 500 collections or 3???

- HESA/HESES: why two returns!!

- No longer see the benefit of huge extra time (staff, development etc) in transition.

- Missed chance to bring ILR into Data Futures.

- No clear price increases for HESA. Are costs going to increase x3 due to the return now being 3 times per year?

- Demands to keep 2017/18 + 2018/19 data quality high during Data Futures integration.

- Increase burden on institutions.

- Increasing the burden!

- Could ILN [ULN?] solve all of this?

- Original remit was ‘quick wins’ and ‘reducing the burden’, which is not the case.

- Promised flexibility is not coming true - eg no mechanism for new HECoS codes.

Buy-in

- Explaining why is hard.

- Management not bought in.

- No one cares about Data Futures (or more to the point, no one with the money/power/influence cares about Data Futures).

- Lack of engagement at a senior level at the University.

- Constant change for Faculty staff.

- Making the rest of the Uni aware!

- Lack of understanding by Senior Management Teams.

- Senior Management not understanding the burden of it on staff & resources.

- Lack of Senior Management understanding.

- Lack of Senior Management buy-in to readiness.

- Relationship with Schools.

- Internal unpopularity if DF is seen to impact negatively on other areas.

- Difficult to sell to senior leaders.

- Buy-in from other depts - don’t understand [what] the change means. Senior.

- “Don’t you just press a button?”

- Institution. Lack of buy-in.

- We have to get lots of others to change.

- Getting management buy-in is hard.

Collaboration

- Lack of information available from HESA & OfS.

- Not enough info to feed back to consultations if not involved in Alpha/Beta.

- Lack of stakeholder engagement.

- Institutions have lost their say - feel less influence.

- Consultations - we aren’t able to have an impact.

- Sector views not listened to.

- Practitioners don’t feel listened to.

- Decision making not always transparent.

- If you aren’t in Alpha & Beta you are forgotten - and we’re not in there for a reason! This could be catastrophic.

- Can’t respond to consultations without detail of field coverage & validation.

- No information from Alpha pilot.

- HESA website. Difficult indexing & finding up-to date information.

- Radio silence from HESA/OfS. Too much change with HEFCE & OfS.

- HESA more interested in larger suppliers/universities.

- Other data collectors not fully buying in.

- Have purpose & customers changed?

- Ambitions of HEDIIP (eg shared governance) not realised.

Data model

- New data items, but not enough detail to train.

- Keeps changing.

- Placements.

- Some detail not there.

- Off venue activity! Interpretation of this.

- How will in year submission work with retrospective actions? (eg suspensions)

- Lack of clarity on tolerances.

- Quality rules not there.

- Changing data model (needs to be finalised)

- Changes to the data model.

- Implementation is closer but guidance still not there.

- Changing requirements.

- Star J: data not fit for purpose

- Incomplete

- Lack of clarity

- Module start & end dates. Why?

- Date definition? What is a “start date”?

- The data model keeps changing!

- Hidden burden in data model

- Detail doesn’t seem to be available

- The goalposts keep moving - or are not ready. Software suppliers can’t deliver yet.

- Data model still changing. Doesn’t fit how we work in most places.

- A lot of uncertainties.

- Not having seen the quality rules makes building new, compliant systems difficult.

- Minimal quality rules.

- Changing specification.

- More changes expected during Beta phase.

- Lack of detail - fields.

- Data model for OU students not yet agreed.

- Moving goalposts (DQ spec)

- Trying to manage a changing student records system & a changing specification.

- QUALENT3

- Goal posts always moving & having to redo work already started.

- No printable data definition!

- No quality rules yet!

- What is the final version of the coding manual?!

- Uncertainty over final spec.

- Lack of clarity with definitions.

- Volume of data.

- Huge number of keys.

- Moved from original design.

Data quality

- Regular returns & challenge of data quality.

- Data quality challenges.

- Inadequate DQ checking time.

- There is less scope to ‘fix’ the data before submission.

- ‘HESA’ staff will lose control - but not responsibility.

- Decentralised data.

- Not clear how to update information - and how to identify what has changed!

- Unrealistic expectations on data quality.

- Unrealistic quality expectations.

OfS

- Differences in data for OfS + HESA.

- Impending threat from OfS.

- New OfS jargon - ‘other undergrad’

- Different expectations between HESA/OfS.

- OfS voice too loud.

- Not clear what OfS want.

- OfS not buying into concept of Data Futures.

- Tension between HESA/OfS re spec.

- OfS takeover!

- Late engagement by OfS. Model still changing.

- Late ‘real’ engagement by funding councils.

- It doesn’t fit into the OfS Data Strategy (or does it - no one has actually seen it) and that doesn’t help.

- Unrealistic expectations from government/OfS.

- OfS-driven changes (recording FTE)

Onward use

- What will data be used for?

- How will it affect externally reported data eg League Tables?

- How often is data released to externals from Data Futures? Mid-year data releases for league tables?

- What will happen to data in transition year? League tables?

- Changes in onward use - uncertainty.

- Lack of understanding of what customers will do with our data & when to risk assess implications of data quality (or lack of).

- Lack of data continuity.

- No information on onwards uses of data.

Readiness

- Sector not ready.

- Difficult to change culture.

- Too many questions, not enough answers.

- The culture change required in institutions.

- Culture change.

- How unprepared we feel.

- The uncertainty.

- Actual return more complicated & fear of the unknown

- Unknown!!

- More complex.

- Complexity + confusion.

- Culture change.

- How to quantify how much extra work there’ll be.

- Changes in quality rules - how can we prioritise.

- Change is painful - the old way is well known

- Lack of implementation impact guidance.

- Scope of change.

- Overwhelming: where do I/we start?

- Uncertainty.

- “It can’t be done!”

- It’s a boondoggle.

- Belief that it will be postponed.

Resources

- Senior Management putting pressure on staff to get it right.

- Person resource - where is it going to come from?

- Work! + no resource.

- Need to change processes: costly.

- Extra data items to return, increase workload.

- Increased costs to institutions for staffing this.

- No resources.

- Not sure how much extra resource is needed!

- Pressure on resources.

- Pressure on budgets.

- Having to fit the work around existing workload.

- Benefits are long term, pain is short term.

- Burden too high.

- Burden too high.

- Migration may be too complex and need more resource than expected.

- Increased burden on Returns staff.

- Resource? Planning.

- Lack of resources, time, staff, etc.

- Workload.

- Lack of dedicated resource.

- Squeeze on finances in the whole HE sector - little to spend on extra resource for Data Futures.

- Lots of new jobs =/= lots of new knowledge. Resource.

- Vulnerable to losing staff.

- NOT RESOURCED!!

- Lack of resource.

- Lack of data knowledge in admin teams.

- Lack of faith in internal skills.

- Lack of responsibility for data entered/held by HEP.

Scotland

- SFC - engagement with Scotland

- Is OfS driving policy in rest of UK?

- Funding council involvement.

- Scotland feel left out

- Will needs of SFC early stats + final figures be covered?

- Lack of SFC engagement - has use case been defined for all fields?

- Scotland: reducing the burden? Not engaged enough!

Systems

- Our systems are heavily based on the old HESA ways.

- SRS suppliers aren’t ready yet…

- SRS uncertainty.

- Cannot migrate software supps.

- Software suppliers. Systems not ready.

- Not enough info from Tribal!

- Relying on developers!

- In-house delays in change of software.

- We have no records system to run it from.

- No information from Tribal.

- Implementing SITS - chicken/egg?

- Lots of system interdependencies - difficult to change things in isolation.

- High dependency on student record system providers - when will upgrades be available?

- CHANGING SYSTEMS AARGH

- Volume of system changes required.

- Will software be available from software houses?

- Software suppliers delayed.

- In year submission: how will it work? Implications on systems.

- Software systems being able/ready to provide training.

- Lack of student records system provider engagement.

- Reliance on software suppliers to extract data.

Theory & practice

- Smaller institutions batch student returns work up - not compatible with Data Futures environment.

- Increasingly invasive: students + institutions.

- Regulation driving business.

- Real life is more messy than the data customers think.

- Assumption that all data is in a single place, eg specialist placements like medicine.

- Structure further away from HEP business, not closer.

- Multiple course deliveries for different year abroad options - adds no value, can just report at student level.

- Definitions don’t fit with sector view - course without level.

- Timing for collection of some data items in reference periods not “business-like”.

- Current business processes built around model that is now upended.

Timescales

- Rumours (especially of delays)

- The delays.

- Delays.

- The timescales are no longer realistic.

- Shifting timelines.

- There’s no more time/capacity to do this - it’s an as well - and hard to get new resource in the uncertain atmosphere. Too many other changes as well.

- Slippage! Alpha delayed. Beta delayed.

- Staffing/structure changes at HESA + OfS has knock on effects to timescale.

- Risk of boredom due to delay.

- Push back of beta to Jan has caused internal friction.

- HESA running behind.

- Timeframes - are they achievable?

- HESA deadlines moving but ours are not.

- Timescales squeezed so more done in parallel.

- Timeline (still got HESES etc)

- Timelines for development.

- Conflict with other priorities.

- Same time as TEF, Graduate Outcomes pilots or first years.

- Timings not really thought through.

- Feeling squeezed between delays/changes + immovable deadlines.

- Not ready. No data platform. No info re Beta. Delays.

- What happens if not ready during trial period?

- Changing goalposts.